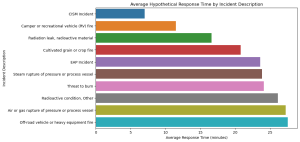

The predictive model developed for forecasting incident frequencies represents a significant step towards utilizing machine learning for emergency management and planning. Employing a RandomForestRegressor, the model leverages historical data on incidents, specifically focusing on the most common incident type across various districts and months. The choice of RandomForestRegressor is advantageous due to its ability to handle complex, non-linear relationships between features and the target variable. It’s also known for its robustness against overfitting, especially when dealing with diverse and heterogeneous data, as is often the case in incident reports.

However, the model’s performance, as indicated by the mean squared error (MSE) of approximately 14845.27, suggests that there is substantial variability between the model’s predictions and the actual incident frequencies. This level of MSE points towards a need for further refinement. While RandomForest is a powerful tool, the complexity of predicting incident frequencies—affected by numerous, often interrelated factors such as seasonal changes, demographic shifts, and urban development—poses a significant challenge. The model currently considers only the district and month, which might be overly simplistic given the multifaceted nature of the problem. Additionally, the interpretability of RandomForest models can be limited, making it harder to extract actionable insights directly from the model’s predictions.

To enhance the model’s performance, several steps could be taken. Incorporating more granular and diverse data, such as weather conditions, special events, and demographic information, could capture a wider array of factors influencing incident frequencies. Experimenting with different machine learning algorithms, including gradient boosting or neural networks, might yield improvements in prediction accuracy. Tuning the model’s hyper parameters through cross-validation and conducting a feature importance analysis could offer deeper insights into the data’s underlying patterns. Despite its current limitations, the model offers a foundational understanding of incident trends and can be instrumental in guiding resource allocation and emergency preparedness strategies. Continuous improvement, driven by additional data and advanced analytical techniques, will be key to enhancing the model’s utility in real-world applications.

- Model Accuracy: A lower MSE value generally indicates a more accurate model. An MSE of 14845.27 suggests that there is room for improvement in the model’s predictions. The actual performance should be contextualized against the range and distribution of the incident frequencies in the dataset.

- Complexity of the Problem: Predicting incident frequencies is inherently complex due to the influence of various unpredictable factors like weather conditions, population density changes, and other socio-economic factors. The current model only considers district and month, which may not capture all the nuances affecting incident frequencies.

- Model Simplicity and Interpretability: The RandomForestRegressor is a robust algorithm known for handling non-linear relationships well, but it can be a ‘black box’ in terms of interpretability. While the model might capture complex patterns in the data, understanding the exact contribution of each feature (like district and month) to the predictions can be challenging.

- Potential for Improvement: The model’s performance could potentially be improved by incorporating additional relevant features (such as weather data, special events, demographic information), tuning hyperparameters, or experimenting with different modeling techniques (like gradient boosting or neural networks). Cross-validation and feature importance analysis could also provide insights for model refinement.

- Practical Implications: Despite its current limitations, the model can still offer valuable insights for emergency services. By identifying trends and patterns in incident frequencies, it can aid in resource planning and allocation, even if the predictions are not perfectly accurate.

In summary, while the current model demonstrates a foundational approach to predicting incident frequencies, there is potential for further refinement to enhance its accuracy and reliability. Continuous evaluation and improvement, guided by domain knowledge and additional data sources, are key to developing a more robust predictive tool.